ARIMA model To The Rescue

This is the second entry in our series of “Unplugged” tutorials, in which we delve into the details of each of the time series models with which you are already familiar, highlighting the underlying assumptions and driving home the intuitions behind them.

In financial time series and other fields, we often face a non-stationary time series, for example, traded security (e.g., stock, bond, commodity, etc.) price levels. In this case, the time series exhibits either trending, seasonality, or merely misguided (random) walk. Unfortunately, the bulk of time series and econometric methods can be applied only to stationary processes, so how do we handle this scenario?

In this issue, we tackle the ARIMA model – an extension of the ARMA model, but the ARIMA model applies to non-stationary time series – the kind of time series with one or more unit-roots (integrated).

Once again, we will start here with the ARIMA Excel process definition, stating the inputs, outputs, parameters, stability constraints, and assumptions. Then we will introduce the integration operator and draw a few guidelines for the modeling process.

Background

A non-stationary time series often exhibits a few common patterns including trend over time, seasonality, and misguided random walk. The trend or seasonality can also be classified as either deterministic (function of time) or stochastic (function of past values).

For stochastic trend and/or seasonality, we often difference (i.e. compute the change of) the original time series to induce a stationary series which can be further modeled by an ARMA type of process.

By definition, the auto-regressive integrated moving average (ARIMA Excel) process is an ARMA process for the differenced time series.

Alternatively, in a simple formulation, an ARIMA (p,d,q) is defined as follows:

$$\left(1-\sum_{i=1}^p{\phi_i L^i} \right )(1-L)^d Y_t =\mu + \left(1+ \sum_{j=1}^q{\theta_j L^j} \right )a_t$$

Or

$$\left(1-\sum_{i=1}^p{\phi_i L^i} \right )Z_t = \mu + \left(1+ \sum_{j=1}^q{\theta_j L^j} \right )a_t$$ $$\left(1-\sum_{i=1}^p{\phi_i L^i} \right )(Z_t-\mu) = \left(1+ \sum_{j=1}^q{\theta_j L^j} \right )a_t$$ $$Z_t=\Delta^d Y_t=(1-L)\times(1-L)\times \cdots \times (1-L) Y_t=(1-L)^d Y_t$$.

Where:

- $Y_t$ is the observed output at time t.

- $\Delta^d$ is the difference operator of order d.

- $Z_t$ is the differenced time series at time t.

- $a_t$ is the innovation, shock or error term at time t.

- ${a_t}$ time series observations:

- Are independent and identically distributed.

- Follow a Gaussian distribution (i.e. $\Phi(0,\sigma^2)$).

Assumptions

Looking closer at the formulation, we see that the ARIMA Excel process is essentially an ARMA process for the differenced time series aside from the difference operator ($\Delta^d$). The same assumption for an ARMA process applies here as well:

- The ARMA process generates a stationary time series $Z_t$.

- The residuals ${a_t}$ follow a stable Gaussian distribution.

- The components’ parameter $\{\phi_1,\phi_2,\cdots,\phi_p,\theta_1,\theta_2,\cdots,\theta_q\}$ values are constants.

- The parameter $\{\phi_1,\phi_2,\cdots,\phi_p,\theta_1,\theta_2,\cdots,\theta_q\}$ values yield a stationary process.

Sound simple? It is! A careful selection of the ARMA model parameters can guarantee a stationary process for the differenced time series ($Z_t$), but how do we interpret the forecast of $Y_t$ using $Z_t$.

Integration (un-difference) Operator

In many cases, we often apply a difference operator to yield a stationary time series that can be easily modeled using the ARMA type of model. But how do go back to the original un-differenced time series space and interpret the ARMA results (e.g. forecast)? Our best bet is to use the Integration Operator.

DEFINITION: a stochastic time series $\{Y_t\}$ is said to be integrated of order (d) (i.e. $Y_t\sim I(d)$) if the d-times differenced time series yields an invertible ARMA representation.

$$a_t=\frac{1-\sum_{i=1}^p {\phi_i L^i}}{1-\sum_{j=1}^q {\theta_j L^j}}\Delta^d Y_t =\frac{1-\sum_{i=1}^p {\phi_i L^i}}{1-\sum_{j=1}^q {\theta_j L^j}} Z_t=(1+\sum_{i=1}^\infty \pi_i L^i)Z_t$$ $$\sum_{i=1}^\infty {\left | \pi_i \right |}< \infty$$

And, implicitly;

$$\left(1-L\right)^d Y_t \sim \textrm{stationary}$$

Now, to recover $Y_t$ from the $(1-L)^d Y_t$, we apply the un-difference (integration) operator.

A first order integration can be expressed as:

$$Y_t=\frac{Z_t}{1-L}=Z_t \times (1+L+L^2+L^3+\cdots)=Z_t\sum_{i=0}^\infty L^i$$ $$Y_t=\sum_{i=0}^\infty Z_{t-i}$$ $$Y_{T+n}=Y_T + \sum_{i=1}^n Z_{T+i}$$

For higher order (i.e.$d$-order) integration, we simply integrate multiple times:

$$Y_t=\frac{Z_t}{(1-L)^d}=Z_t\times \frac{1}{1-L}\times \frac{1}{1-L}\times \cdots \times \frac{1}{1-L} = Z_t\times \left(\sum_{i=0}^\infty L^i \right )^d$$

For instance, for $d=2$, the integration operator is defined as follows:

$$Y_t=\frac{Z_t}{(1-L)^2}=Z_t\times \frac{1}{1-L}\times \frac{1}{1-L} = Z_t\times \left( 1+L+L^2+L^3+\cdots\right )^2$$ $$Y_t = Z_t (1+2L+3L^2+\cdots+(n+1)L^n+\cdots)=Z_t\sum_{i=0}^\infty {(i+1)L^i}$$ $$Y_{T+n}=Y_T+n\times W_T+\sum_{i=1}^{n-1}{(n+1-i)Z_{T+n-i}}$$

For $d=3$, the integration operator is defined as follows:

$$Y_t=\frac{Z_t}{(1-L)^3}=Z_t\times \frac{1}{1-L}\times \frac{1}{1-L} \times \frac{1}{1-L}= Z_t\times \left( 1+L+L^2+L^3+\cdots\right )^3$$ $$Y_t = Z_t (1+3L+6L^2+\cdots+\frac{(n+1)(n+2)}{2}L^n+\cdots)=Z_t\sum_{i=0}^\infty {\frac{(i+1)(i+2)}{2}L^i}$$ $$Y_{T+n}=Y_T+n\times W_T+\frac{n(n-1)}{2}V_T+\sum_{i=1}^{n-1}{\frac{(n+1-i)(n+2-i)}{2}Z_{T+n-i}}$$ $$W_T=Y_T-Y_{T-1}$$ $$V_T=W_T-W_{T-1}=Y_T-2Y_{T-1}+Y_{T-2}$$

Since $\{Y_t\}$ is an integrated timer series of order, then $Z_t$ is a stationary time series which has an invertible ARMA representation:

$$Y_t=a_t\sum_{k=0}^\infty {\psi_i L^i}\times \sum_{j=0}^\infty L^i\times \sum_{j=0}^\infty L^i\times \cdots \times \sum_{j=0}^\infty L^i = a_t\sum_{k=0}^\infty {\psi_i L^i}\times \sum_{k=0}^\infty {\zeta_i L^i}\\ $$

We can compute the conditional variance at time T+n given the information available at time T:

$$\textrm{Var}\left(Y_{T+n}\|Y_T Y_{T-1}\cdots Y_1 \right )=\textrm{Var}\left( a_t\sum_{k=0}^\infty {\psi_i L^i} \sum_{k=0}^\infty {\zeta_i L^i}\right )=\sigma_a^2\times \sum_{i=1}^{n-1}\gamma_i^2$$

Where:

$$\gamma_i=\sum_{k=0}^i{\zeta_{i-k}\times \psi_k}$$ $$\zeta_o=\psi_o=1$$

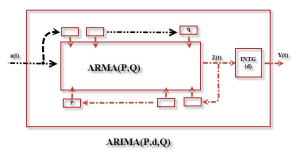

ARIMA Machine

The ARIMA Excel process is a simple machine that retains limited information about its past differenced outputs and the shocks it has experienced. In a more systematic view, the ARIMA process or machine can be viewed as below.

Note that we are observing the integrated output of the ARMA process ($Y_t$), but the machine processes the differenced outputs ($Z_t$). The INTG block references the integration operator.

How do we know if we have a unit-root in our time series?

Aside from the statistical tests for unit-root (e.g. ADF, KPSS, etc.), there are a few visual clues for detecting unit-root using the ACF and PACF plots. For instance, a time series with unit-root will exhibit high and very slow decaying ACF values for all lags. On the PACF plot, the PACF value for the first lag is almost one (1), and the PACF values for lag-order greater than one are insignificant.

For statistical testing, the Augmented Dickey-Fuller (ADF) test will examine the evidence for a unit root, even in the presence of a deterministic trend or squared time trend.

Statitical Characteristics

In our description of the ARIMA process, we highlighted a single input stimulus: shocks/innovations, emphasizing how they propagate throughout the ARIMA machinery to generate the observed output. The ARIMA Excel machine is basically an ARMA machine, but the output is integrated before we can observe it. How does this affect the output distribution?

Why do we care?

The statistical distribution (i.e.$\Psi$ ) of the output ($Y_{T+n}$) is pivotal for conducting a forecast and/or establishing a confidence interval at any future time (T+n).

$$Y_{T+n}\sim \Psi(\mu_{T+n},\sigma_{T+n}^2)$$ $$\mu_{T+n}-Z_{l}^{\alpha/2}\sigma_{T+n}\leqslant \hat Y_{T+n} \leqslant \mu_{T+n}+Z_{u}^{\alpha/2}\sigma_{T+n}$$

Where:

- $\hat Y_{T+n}$ is the out-of-sample forecast at time T+n.

- $Z_{l}^{\alpha/2}$ is the lower critical value for $\alpha/2$ significance level.

- $Z_{u}^{\alpha/2}$ is the upper critical value for $\alpha/2$ significance level.

- $\sigma_{T+n}^2$ is the conditional variance at time T+n.

By now, the importance of understanding the output statistical distribution should be clear. Now how do we go about forming that understanding?

Back to the definition, the differenced time series $\{Z_t\}$ is modeled as a stationary ARMA process. Let’s convert it to an infinite-order MA model:

$$(1-\sum_{i=1}^p{\phi_i L^i})Z_t=(1+\sum_{j=1}^q \theta_j L^j)a_t$$ $$Z_t=\frac{1+\sum_{j=1}^q \theta_j L^j}{1-\sum_{i=1}^p{\phi_i L^i}}=(1+\sum_{i=1}^\infty{\psi_i L^i})a_t=\sum_{i=0}^\infty{\psi_i L^i}a_t$$ $$\psi_o=1$$

Now, let’s recover the original time series from $\{Z_t\}$.

Example:

Let’s consider the following differenced series $Z_t=(1-L)Y_t$. To recover the $\{Y_t\}$ time series, we simply add up all the differences to date.

$$Z_T=Y_T-Y_{T-1}$$ $$Y_{T+n}=Y_T+\sum_{i=1}^n{Z_{T+i}}=Y_T+\left( \sum_{i=1}^n\sum_{j=0}^\infty{\psi_j L^j}\right ) a_{T+n}=Y_T+\sum_{i=1}^n\left(a_{T+i}\sum_{j=0}^{i-1}\psi_j \right )$$

Now, the variance of the forecast is expressed as follows:

$$\textrm{Var}\left(Y_{T+n} \right )=\sigma_a^2 \times \sum_{k=1}^n\left(\sum_{i=0}^{n-k} \psi_i \right )^2$$

As we see, although computing the forecast is a simple exercise of summing all prior differences, the variance calculation is much more involved.

Furthermore, as $n\gg 1$, the $Z_{T+n} \to \frac{\mu}{1-\sum_{i=1}^p{\phi_i}}$, so the $Y_{T+n}$ estimate/forecast asymptotically approaches the deterministic linear trend defined by $Y_T + \frac{n\times \mu}{1-\sum_{i=1}^p{\phi_i}}$.

Conclusion

In simple terms, an ARIMA process is merely an ARMA process whose outputs have gone through an integrator. The integrator causes the observed time series $\{Y_t\}$ to be non-stationary. The integration process introduces the unit-root into $\{Y_t\}$. Integrating multiple times introduces multiple unit-roots into the output time series. This is why the word “integrated” is used in ARIMA.

The main takeaway of this paper is that differencing is a special transformation procedure that is aimed to convert a non-stationary time series into a stationary one. Like all transformations, care must be taken when we interpret the results back into the original time series space.

Notice that the unit-root modeling (e.g. ARIMA) is intended to capture a stochastic trend and it is not suited for a deterministic trend. If you suspect the presence of a deterministic trend, you should explore this avenue first (i.e. regress over time). At that point, you may choose to take the residuals and apply an ARMA type of process to exploit any remaining dynamics.